OSDI20——Fast-RDMA-based-Ordered-Key-Value-Store-using-Remote-Learned-Cache

Step 1

题目摘要引言

Title

Fast RDMA-based Ordered Key-Value Store usng Remote Learned

Related work: RDMA-based ordered Key-Value store like DrTM-Tree,Cell, eRPC+Masstree

Abstract

RDMA has gained considerable interests in network-attached in-memory key-value stores.

Q1: Why RDMA?

Challenge: traversing remote tree-based index in ordered stores ordered? oredered.

multiple roundtrips -> order-of-magnitude slowdown | limited scalability

Q1: Why need multiple roundtrips.

As for ML

perfect cache structured for the tree-based index.

Implementation: XSTORE

Key idea: decouple

Evaluation

compare to DrTM-Tree, Cell, eRPC+Masstree

Recent effort(way):

propose index caching to reduce RDMA operations (doesn't work woell with the tree-based cache)

Introduction

In-memory key-value stores with network -> foundation of many datacenter applications. (database, dfs, web-services, severless computing)

A1: CPU becomes bottleneck and limits the scalability of increase of clients. RDMA bypass CPU. Then as for in-memory KV stores, thinking about RDMA-based KV, enable direct access to the momory of remote machines with low latency

Inspired by recent research using ML models an alternative index structure.

As for ML. Core idea.

The client uses learned cache to predict a small range of positions for a lookup key and then fetches them using one RDMA READ. After that, the client uses a local search (e.g., scanning) to find the actual position and fetches the value using another RDMA READ.

Challenge: using ML models. slow(frequently retraining ML models) and costly(keeping data in order) for dynamic workloads.

Solution: hybrid architecture, dynamic workloads(insert) -> tree-based index; static workloads(gets and scans)

->

a layer of indirection

Q3: why need a layer of indirection to translate

Conclusion in summary

- learned cache

- hybrid architecture

layer of indirection

prototype named XSTORE

基本理论概况

结论部分

回答基本问题

类别

对原型系统进行描述 XSTORE

内容

RDMA 相关,以前未曾涉及

正确性

有效

创新点

使用 ML 来建模 Cache,

清晰度

比较清晰

阅读选择

继续阅读

Step 2

细读笔记

RDMA-based Key-Value Store

KV: in-memory key-value store

network-attached: client-server model

tree-backed: range index structures

KV interfaces including \(GET(K)\), \(UPDATE(K,V)\), \(SCAN(K,V)\), \(INSERT(K,V)\) and \(DELETE(K**\)

architecture design

Server-centric design(S-RKV):

- Reimplement the communication layer (e.g. RPC) using RDMA primitives.

- This design allows access to the server-side store with only two RDMA operations no matter how complex the index structures are.

- Client-direct design(C-RKV):

- Bypass server cpu

- May consume extra network round trips for traversing th tree-based index.

- This commomn choice is also motivated by the read-dominated nature of most applications.

- index cache: cache top levels(due to the large tree-based index) to reduce network round trips.

Analysis and Motivations

- CPU is the primary scalability bottleneck in the sever-centric design

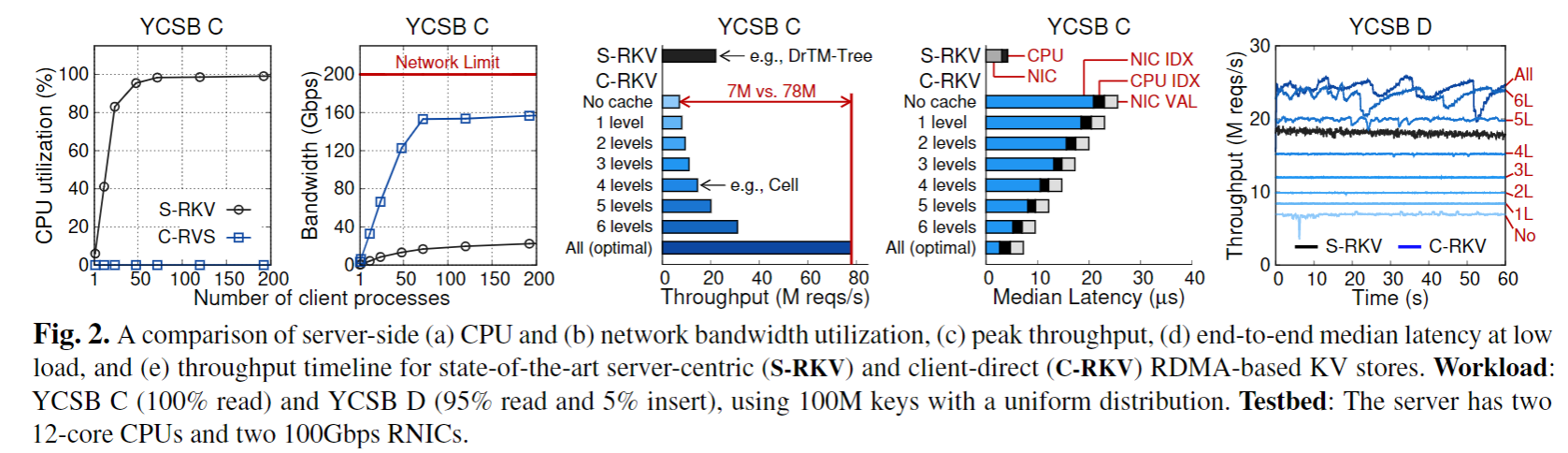

- Fig.2a shows that the S-RKV design makes the server rapidly saturates all CPUs

- S-RKV just consumes 11% of network bandwith and CPU first becomes the performance bottleneck

- Costly RDMA-based traversal is the key obstacle in the client-direct design

- Fig. 2c sgiws RDMA-based traversal limits the peak throughput of C-RKV to 7 million requests per second, even much lower than that of S-RKV.

- 4 level cache (down from 8) Cell peaks at 14.5M reqs/s

- Tree is not a proper structure for RDMA-based index cache

- index is large. 理论最好情况(全部 cache)能达到 78M reqs/s,是 S-RKV 最好情况 24M reqs/s 的 3.3 倍。

- memory-intensive but low-compute operation

- updates to the tree-based index might progagate the changes from the leaf level to the root node, sothat the index updates would probably invalidate the cache recursively

An interesting dimension in the NAM architecture is caching of hot index nodes in compute servers that are frequently accessed. Caching allows compute servers to avoid remote memory transfers from memory servers. This is similar to the co-location of compute and memory servers.

For tree-based indexes, where inserts and deletes might propagate up to the index from the leaf level to the root node, we observed that cache invalidation is even a more severe issue since one insert/delete operation might need to invalidate multiple cached index nodes.

Approach and Overview

Using ML Models: Inspired by learned index

Cumulative distribution function(CDF): map the sorted keys to the actual position, \(P = CDF(K)\)

Models: Approximate the shape of a CDF using ML models, like NN, LR. Given a lookup key (K), the model (the black curve) can predict a position (pos) with a min- and max-error, and a local search (e.g., scanning) around the prediction is used to get the actual position.

From the given key to a sorted array.

Learned Cache: The key idea behind XSTORE.

- First, cache whole(compared to partial) index at the cost of accuracy.

- reduce the network round trips

- memory-efficient

- Second, approximately predict a range of positions for one lookup key simply (a single multiplication and addition like linear regression)

- also reduce end-to-end latency even compared to a whole-index cache.

- Finally, instead of fine-grained and recursive invalidation in the tree-based cache, ML model can delay due to approximate predictions.

- Save invalidation cost compared to a whole-index cache.

- First, cache whole(compared to partial) index at the cost of accuracy.

- Challenge: Dynamic Workloads

- dynamic workloads (e.g., inserts and deletes) violated the "sorted" assumption

- Model retraining is slow and costly

- Alternative: intros delta index (server centric?) would incur more network round trips and severely increase the latency.

How to make learned cache keep pace with dynamic workloads at low cost becomes a key challenge.

- Overview of XStore:

- Server hosts a B+tree index (XTREE) which store kv pairs at the leaf level physically.

- Client use library to interact with server and host a local learned cache

- Client-direct design for read-only requests and the server-centric design for the rest.

- Server-centric design, transfer to remote

Design and Implementation

XTree: at the server, a B+tree index(XTREE) and stores key-value pairs at the leaf level physically.

- optimized for remote reads in leaf node

- 24-bit incarnation

- 8-bit counter

- 32-bit right-link pointer to next sibling

- keys with n slots and values with n slots

XCache with TT: at the client, a local learned cache (XCache) with consists of a-level recursive ML model and a translation table (TT)

- virtual address: Predict a range of positions (POS[..]) within a sorted array (logically stitching together all leaf nodes of XTREE)

- TT is located by the leaf-node number (LLN) of a valid bit, a 31-bit actual leaf-node number (ALN). 24-bit incarnation and 8-bit counter.

Training models and TT:

- Read code

- train each sub-model on a sorted array of its keys with a private logical position at a leaf node granularity (line 12-21) and calculate min- and max-error for every sub-model.

- note that the keys in the leaf node across sub-models will be trained by both of sub-models (in several kset[i] due to the same predict of \(xmodel.top.predict(k) \times M\)).

- Each sub-model has independent logical positions and an own translation table, making it easy to retrain a sub-model individually when necessary.

- A memory-performance trade-off:

- model is small but TT is large

- XMODEL with 500K sub-models only needs less than 6.7MB(14B per model)

- 100M keys, suppose each leaf node has 16 slots and is half-full, TT requires nearly 100MB. ( 100M * 64(bit) / 8(slots) /8(B/bit))

- cache on demand

Client-direct Operations

GET

Read code eaxmple. 根据图 7 展示的 LOOKUP 流程我们知道模型预测的是一组 leaf node ID,根据 TT 表转译并通过 door bell batching 技术(该优化技术帮助我们用一次 RDMA READ 读取非连续的内存区域信息)读取到远程内存中叶子节点的信息,然后在本地进行 local search,计算所要 key 的实际地址。

most likely to read just one leaf node due to the low predection error of XMODEL

invalid in TT entry(line 9 and 17) would result in a fallback path, which ships the get operation to the server and fetches updated models and TT entries using a single request(i.e., server-centric design).

SCAN

find N key-value pairs (in order by key) starting with the next key at or after K.

- uses the lookup operation with K to determin the remote address of the first key-value pair

- predicts the leaft nodes that contain next n key-value pairs with the help of TT(provides CNT and ALN in sorted order by key).

- use one RDMA READ with doorbell batching to fetch these leaf nodes

Non-existent Keys

Non-existent key 的 read,预测的键范围应该 cover 键本身。两层结构对于出于 sub-model 边界的 key 可能会产生错误预测。

解决办法:增加训练集,仅增加一个边界键到相邻两个 sub-models 的训练集中。

- 由于两个子模型之间叶节点的 keys 都经过了训练,在大多数情况下不需要进行数据扩充。

Server-centric Operations

- Correctness: no lost keys

- Concurrency: HTM-based concurrent B+tree, HTM 区域+RDMA 强一致性(终止访问统一内存位置的 HTM 事务)。缓存那里不太理解。

UPDATE

Update to the value will not change the index, so that it will also not influence the learned cache and belongs to static worklodas.

Optimization: position hint. Still benefit from the learned cache.

INSERT and DELETE

in-place inserts and deletes: XTREE chooses not to keep key-value pairs and reduces working set in the HTM region. look up based on the learned cache will not be affected since it fetches all keys

The oirginal leaf node should increment its incarnation, which makes the clients realize the split.

Retraining and invalidation: The insert of a new leaf node will break the sorted(logical) order of leaf nodes and cause model retraining. Decouple model retraining from index updating until overlapped with a split.

Server 端后台独立进行 sub-model 的训练以及新 TT 表的构建。

The incorrect prediction can be detected by incarnation mismatch between the leaf node an cached TT entry (line 17 in Fig. 7) and results in a fallback, which ships the operation to the server. The client will update XCACHE with a retrained model and its translation table fetched by the fallback.

Optimization: speculative execution: A split of leaf node just moves the second half of key-value pairs (sorted by key) to its new sibling leaf node. Therefore, the prediction to the split node must still be mapped to this node or its new sibling. 到拆分节点的映射必须拆分到该节点(移除后半部分)或者它右侧的同级节点。Based on this observation, speculative execution is enabled to handle the lookup operation on a stale TT entry (i.e., incarnation check is failed). The client will still find the look up key in the keys fetched from the split leaf node. If not found, the client will use its right-link pointer to fetch (the second half) keys from its sibling (one more RDMA READ). It means there is roughly half of the chance to avoid incurring a performance penalty. Currently only consider one sibling before using a fallback. This optimization is important for insert-dominate workloads (e.g., YCSB D) since insert operations and retraining tasks might keep server CPUs busy; the fallbacks will also take server CPU time.

Model expansion: increase the number of sub-models in XMODEL at once (e.g., doubling) when necessary

Durability

log writes for persistence and failure recovery

Reuse the existing durability mechanism in the concurrent tree-based index extended by XTREE, like version numbers.

Scaling out XSTORE

coarse-grained scheme,见文献 57

distribute an ordered key-value store span multiple servers (scale-out).

Assign key-value pairs based on a range-based partitioning function for keys.

Discussion

Support variable-length keys: a limit, now supports fixed-length key and variable/fixed-length value. fat key pointers and solutions

Data distribution: trade off among memory consumption of XCACHE, the retraining costs of XMODEL, and the performance of XSTRORE.

Evaluation

Set up

Testbed:

- 15 client machines

- 12-core Intel Xeon CPUs,

- 128GB of RAM, and

- 2 ConnectX-4 100Gbps IB RNICs

- RNIC is used by threads on the same socket and connected to a Mellanox 100Gbps IB Switch.

Workloads.

- mainly focus on YCSB

- A: update heavy

- B: read mostly

- C: read only

- D: read latest

- E: short ranges

- F: read-modify-write

- 100 million KV pairs initially (a 7-level tree-based index and a leaf level), 8-byte key and 8-byte value are used.

- mainly focus on YCSB

Answer questions

- Compare targests.

- DrTM-Tree

- eRPC+Masstree(noted as EMT)

- Cell(not open-source)

- RDMA-Memcached (noted as RMC)

YCSB Performance

Read-only workload (YCSB C):

- XSORE can achieve 82 million requests perscond (even a little higher than the optimal throughput)

- only uses one RDMA READ to fetch one leaf node per lookup.

- 18% drop in Zipfian distribution. suspec that

- 多个 clients 读取小范围内存

- 怀疑 RNIC 根据请求地址检查 RDMA 操作之间的冲突,即便没有冲突,这样的检查也会争夺 processing resources。

Static read-write workloads (YCSB A,B, and F):

- still bottlenecked by server CPUs for handling updates but better than server-centric design

- YCSB even higher YCSB C

- the read requests are less skewed interleaved with (10%) updates, compared to read-only workloads(YCSB C)

- the server of XSTORE has not been saturated ; thus itis still sufficient to perform updates, compared to update-heavy workloads(YCSB A)

- YCSB 75% between A and B

Dynamic workloads (YCSB D and E)

contention(performance slowdown) happs differently

- For DrTM-Tree and EMT, on tree-based index

- For XSORE and Cell, cache invalidations.

- overhead for XSTORE

- hard to learn than static so prediction error increase (dynamic workloads distribution is close to noised linear)

- optimal means a whole-index cache. 3x slower than static workload due to cache invalidations. latest distribution significantly reduces cache misses and invalidations, so is better than Uniform

CPU utilizations of XSTORE:

- no graphs

- saves server CPUs compared to sever-centric(under half compared to 100% in DrTM-Tree)

End-to-end latency:

- YCSB C uniform distribution(others similar)

- when low load, server-centric KVs have lower latency, as one RPC round trip is faster than two one-sided RDMA operations(NIC_RPC compare to NIC_IDX and NIC_VAL)

Production Workload Performance

similar to YCSB A

Scale-out Performance

Perform better with the increase of RNICs. 6 server RNICs in 3 server machines. up to 145M reqs/s which is limited by the number of client machines.

XSTORE needs about eight client RNICs to saturate one server RNIC

Model (Re-)Training and Expansion

训练速度需要与动态负载的 insertion 速度匹配,模型 retraining 跟不上会影响整体的 throughput。

**For dynamic workloads, the throughput of XSTORE would decrease whe stale sub-models can not retrained in time*

图 14 对比说明了该问题。

可以设定随着 keys 数增多,达到一定阈值后,重新训练 sub-models 增加的新模型。Model Expansion

问题记录

未读(且值得读)文献记录

State-of-the-art RDMA-based ordered KV Stores Design

Concurrent B+ Tree

Step 3

思路复现

关于两次 RDMA READ

email 询问作者魏星达的回复

问题名称:XTree 叶子节点的 Value 保存的是指具体的数据,还是指向数据部分的地址。

XSTORE 在 value 时定长的时候保存的是具体的数据,不定长的时候储存的是地址。

这里我疑惑如果保存的是具体数据,我们为什么还需要在这里确定 key 的“实际地址”后再进行一次 RDMA READ,value 值不是可以再叶子节点中读取到吗?

你的问题是正确的。理论来说,如果叶子存的是具体数据,那么一次 RDMA 就可以完全读回。这边 XSTORE 使用两次是为了节约 RDMA 读的 payload。

举例来说,如果使用一次 RDMA,则需要读取叶子里的所有数据加上 \(Key\),这样会读取过多的值 \((n * sizeof(Key) + n * sizeof(Value)\),其中 \(n\) 是叶子节点最多的 item 数量),造成 RDMA bandwidth 的浪费。

如果使用两次 RDMA,则总共需要读 \(n * sizeof(Key) + sizeof(Value)\) 数据,如果 \(Value\) 的大小比 \(Key\) 大很多(通常是这种情况),则会更加高效。

证明与推理复现

实验验证复现

Original Presentation

Introduce

- Traditional KVS uses RPC(Server-centric) -> kv will close focus on the server cpu.

lead to huge cpu cost with random reads (nic's bandwith > cpu frequency)

- One-sided RDMA(client-direct)

workload shift from the server cpu to the client cpu -> client-direct

simple read/write -> easy for hashtable but hard for tree-based index structures

QQ1: Why n RTT?

QCache:

- existing systems adopt caching

cost a huge amount of memory

copare the three designs of RDMA usage

XSTORE

- Server-centric updates

- Because one-sided has simple semantic

insert -> tree split and let it to the remote CPU

Learned cache

Realted: Learned index

索引是模型:b 树索引可以看作是一个模型,它是一个记录在排序数组中的位置的键

Key --index model--> Address

Client-direct get() using learned cache

first train model at the server(small memory usage)

transfer from server to the client

Note: learned model assumes that the KV are stored in a sorted array

- #1 1 RTT for lookup (positions -> keys)

- #2 Small memory footprint

Challenge: Dynamic insertions/deletions? Leaned model assumes a sorted array(inefficient to support for insertions and deletions

XSTORE

Client-> model & TT

2020/12/14 meeting

RDMA design

Cache

- Cache too large

- Cache miss

- Random acces

Why 2 times RDMA -> TT

Decouple insert O1 challenge

ML correct about the insertion

Training

2020/12/19 meeting

- B+ 树设计 Cache ,positions 预测

- TT 表的理解

- CDF 曲线样式可能和

自己的序号+1,为什么原来的 XCAHE 模型可以,

新的问题

retraining,保证你本地的 TT 表能更新,能支持动态的 workloads

client 端 TT 表更新策略

HTM transaction

接近 1h:18min,拓展

补:RDMA 上的工作,引入 Learned Cache,

Contents

- Background

- Motivation

- Analysis 3. 原

- Learned Cache 4 Cache 模型本身设计问题,

Contribution 部分

- 阶段性总结

- Model

- Hybrid architecture

Implementation

- XSTORE Cache + TT 表

- Evalutaion(图表?)

- Conclution

- 搬运原有文章

本博客所有文章除特别声明外,均采用 CC BY-SA 4.0 协议 ,转载请注明出处!